The Online Safety Act 2023 is now law and enforcement will be phased in by Ofcom. How should you and your business prepare?

Ambitious plans to make Britain the safest place to be online have recently become law. The Online Safety Act 2023 covers all large social media platforms, search engines, and age-restricted online services that are used by people in the UK, regardless of where such companies are based in the world. What does the Act mean for you and your business, and how should you prepare for it? Our complete guide to the new legislation answers five key questions.

1. What is the Online Safety Act?

The Online Safety Act (OSA) is a new set of laws aimed at creating a safe online environment for UK users, especially children. Due to be phased in over two years, the law walks a fine line between making companies remove illegal and harmful content and protecting users’ freedom of expression. It has been described as a ‘skeleton law’, offering the bare bones of protection which will be fleshed out in subsequent laws, regulations, and codes of practice.

The OSA has had a long and difficult journey. An early draft first appeared in 2019 when proposals were published in the Online Harms White Paper. This defined “online harms” as content or activity that harms individual users, particularly children, or “threatens our way of life in the UK, either by undermining national security, or by reducing trust and undermining our shared rights, responsibilities and opportunities to foster integration.”

The Act covers any service that hosts user-generated images, videos, or comments, available to users in the UK. It includes messaging applications and chat forums, and therefore potentially applies to major social media platforms and search engines such as X (Twitter), TikTok, Facebook, Instagram, BeReal, Snapchat, WhatsApp, YouTube, Google, and Bing.

Fears of a censor’s charter

Early drafts of the “Online Safety Bill” were described by critics as a censor’s charter, and parts of it have been rewritten over time. The current version might have had its claws clipped but it still has teeth. Repeat offenders will potentially be fined up to £18 million or 10% of global revenue, whichever is greater, and company managers risk going to jail for up to two years.
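The ‘whichever is greater’ rule is simple arithmetic. As a minimal sketch, assuming only the figures quoted above (£18 million or 10% of global revenue), the upper bound on a fine could be estimated as follows. The function and constant names are hypothetical and the result is illustrative, not legal advice.

```python
# Illustrative only: the two caps are the figures quoted in this guide.
FIXED_CAP_GBP = 18_000_000   # £18 million
REVENUE_SHARE = 0.10         # 10% of global revenue


def max_fine_gbp(global_revenue_gbp: float) -> float:
    """Return the upper bound of a fine: the greater of the two caps."""
    if global_revenue_gbp < 0:
        raise ValueError("revenue cannot be negative")
    return max(FIXED_CAP_GBP, REVENUE_SHARE * global_revenue_gbp)
```

On these figures, the revenue-based cap only becomes the larger of the two once global revenue exceeds £180 million. Below that, the fixed £18 million figure applies.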

The Act provides a ‘triple shield’ of protection. Providers must:

- remove illegal content

- remove content that breaches their own terms of service

- provide adults with tools to regulate the content they see

Children will be automatically prevented from seeing dangerous content without having to change any settings.

2. What are the key provisions of the OSA?

The Act targets what it describes as “regulated services”, specifically large social media platforms, search engines, or platforms hosting other user-to-user services (for example of an adult nature), along with companies providing a combination of these. Precisely which providers the Act will affect won’t be known until the government publishes further details about thresholds.

Providers will have to comply with measures in four key areas:

- removing illegal content

- protecting children

- restricting fraudulent advertising

- communication offences such as spreading fake but harmful information

2.1 Illegal content

Providers will be expected to prevent adults and children from accessing illegal content. Previously, this was largely restricted to material associated with an act of terrorism or child sexual exploitation. Under the new law, illegal content also includes anything that glorifies suicide or promotes self-harm. Content that is illegal and will need to be removed includes:

- child sexual abuse

- controlling or coercive behaviour

- extreme sexual violence

- fraud

- hate crime

- inciting violence

- illegal immigration and people smuggling

- promoting or facilitating suicide

- promoting self-harm

- revenge porn

- selling illegal drugs or weapons

- sexual exploitation

- terrorism

Guidance published by the government explains that “platforms will need to think about how they design their sites to reduce the likelihood of them being used for criminal activity in the first place.”

The largest social media platforms will have to give adults better tools to control what they see online. These will allow users to avoid seeing material that is potentially harmful but which isn’t criminal. (Providers will have to ensure that children are unable to access such content.)

The new tools must be effective and easy to access and could include human moderation, blocking content flagged by other internet users, or sensitivity and warning screens. They will also allow adults to filter out contact from unverified users, which will help stop anonymous trolls from reaching them.

2.2 Protecting children

The OSA affects material assessed as being likely to be seen by children. Providers will have to prevent children from accessing content regarded either as illegal or harmful. The government’s guidance suggests that the OSA will protect children by making providers (in particular social media platforms):

- prevent illegal or harmful content from appearing online, quickly removing it when it does.

- prevent children from accessing harmful and age-inappropriate content.

- enforce age limits and age checking measures.

- ensure the risks and dangers posed to children on the largest social media platforms are more transparent, for example by publishing risk assessments.

- provide parents and children with clear and accessible ways to report problems online when they do arise.

“Harmful” is a grey area. The Act gives the government minister responsible for enforcing the new law (the secretary of state) the power to define “harmful”. The OSA suggests the minister will do so where there is “a material risk of significant harm to an appreciable number of children.” According to the government guidance, harmful content includes:

- pornographic material

- content that does not meet a criminal level but which promotes or glorifies suicide, self-harm or eating disorders

- content that depicts or encourages serious violence

- online abuse, cyberbullying or online harassment

Social media companies set age limits on their platforms, usually excluding children younger than 13. However, many younger children have accounts. The OSA aims to clamp down on this practice.

2.3 Fraudulent advertising

Under the OSA, providers will have to prevent users from seeing fraudulent advertising such as ‘get rich quick’ scams. An advert will be regarded as fraudulent if it falls under a wide range of offences listed in the Act, from criminal fraud to misleading statements in the financial services area. For large social media platforms and search engines, advertising material is fraudulent if it:

(a) is a paid-for advert

(b) amounts to an offence (the OSA lists possible fraud offences) and

(c) (in the case of social media) is not an email, SMS message (or other form of messaging as listed)
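All three conditions above must hold together. A minimal sketch of that test follows, using hypothetical field names. The Act itself defines the listed offences and the excluded message types, and none of these names come from the legislation.

```python
from dataclasses import dataclass


@dataclass
class Advert:
    # Hypothetical labels for conditions (a) to (c) above.
    is_paid_for: bool                # (a) a paid-for advert
    amounts_to_listed_offence: bool  # (b) amounts to a fraud offence listed in the OSA
    is_excluded_message: bool        # (c) email, SMS or other listed form of messaging


def is_fraudulent_advert(ad: Advert) -> bool:
    """True only when (a) and (b) hold and the advert is not an excluded message."""
    return (
        ad.is_paid_for
        and ad.amounts_to_listed_offence
        and not ad.is_excluded_message
    )
```

Deciding whether condition (b) is met is a legal judgement, so in practice that flag would be set by a review process rather than computed.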

Social media platforms and search engines must:

- Prevent individuals encountering fraudulent adverts

- Minimise the length of time such content is available

- Remove material (or stop access to it) as soon as they are made aware of it

Providers must also include clear language in their terms of service regarding the technology they are using to comply with the law.

2.4 Communication offences

Under the OSA, an offence of “false communication” is committed if a message is sent by someone who intended it “to cause non-trivial psychological or physical harm to a likely audience.” The law applies to individual users and “corporate officers” (who can be guilty of neglect), but excludes news publishers and other recognised media outlets.

An offence of “threatening communication” would be committed if someone sends a message that threatens death or serious harm (assault, rape, or serious financial loss), with the intent of making the recipient fear that the threat would be carried out.

The Act also makes it illegal to encourage or assist an act of self-harm. A crime occurs if an offending message is sent, or if someone is shown an offending message (whoever originally wrote it).

Sending flashing images can also be regarded as an offence. Possible prison sentences under this part of the OSA vary depending on the offence but can be up to five years. A company need not be a provider of regulated services to be caught by this part of the law.

Amendments will be made to the 2003 Sexual Offences Act making it illegal to share or threaten to share intimate pictures, if the offender was seeking to cause distress.

3. What are the requirements for age assurance tech?

In recent years, the UK has been edging ever closer to adopting an online age verification system. After passing the Digital Economy Act (2017), Britain became the first country to allow such a system to be implemented. Websites selling pornography would have had to adopt “robust” measures that stopped children accessing their content. However, enforcing this was easier said than done.

The possibility of a wide variety of porn outlets around the world collecting the personal identity data of UK users led to concerns about breaches of the General Data Protection Regulation (GDPR). The scheme was abandoned in 2019, and at that point the baton was passed to the OSA.

Whether adults accessing pornography will encounter mandatory age assurance under the OSA is still the subject of legislative debate. However, adult content providers will need to ensure that children are not able to see such material. The Act says:

“A provider is only entitled to conclude that it is not possible for children to access a service, or a part of it, if age verification or age estimation is used on the service with the result that children are not normally able to access the service.”

This may then lead providers to commit to age assurance by default to ensure compliance. In its final version, the Act tightens up definitions of ‘assurance’, clarifying how and when this may be provided – whether by estimation tech, verification measures, or both.

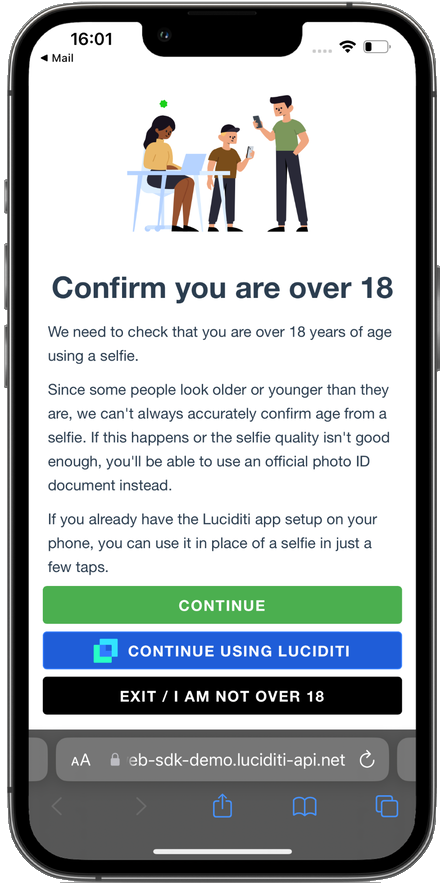

Digital ID providers, such as our own platform Luciditi, use age estimation AI to give quick and easy access to the majority of users. Those close to the age threshold will need to arrange access via age verification, which relies on personal data. Luciditi only sends a simple ‘yes’ or ‘no’ reply to online age restricted access requests. The data itself is securely managed and can’t be seen by third parties. Keeping business operations compliant, Luciditi can be embedded in a client’s website by developers (ours or yours), or even simply via a plug-in.
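The estimate-then-verify flow described above can be sketched as follows. This is an illustration under stated assumptions, not Luciditi’s actual API: the function name, the two-year buffer around the threshold, and the `verified_age` argument are all hypothetical.

```python
from typing import Optional


def age_check(estimated_age: float,
              threshold: int = 18,
              buffer_years: float = 2.0,      # assumed margin, not a published figure
              verified_age: Optional[int] = None) -> str:
    """Return only 'yes', 'no' or 'verify'; no personal data is returned."""
    # A completed verification takes precedence over any estimate.
    if verified_age is not None:
        return "yes" if verified_age >= threshold else "no"
    # Estimates comfortably above the threshold get immediate access.
    if estimated_age >= threshold + buffer_years:
        return "yes"
    # Everyone else is asked to complete age verification.
    return "verify"
```

The design point is that the requesting website only ever receives the short answer, never the underlying estimate or identity data.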

Under the terms of the Act, providers will have to say what technology they are using, and show they are enforcing their age limits. More detail is expected to be given by Ofcom (see section 4, below) later this year. In June 2023, Ofcom said:

“Online pornography services and other interested stakeholders will be able to read and respond to our draft guidance on age assurance from autumn 2023. This will be relevant to all services in scope of Part 5 of the Online Safety Act.”

[Part 5 relates to online platforms showing pornographic content].

Lawyer Nick Elwell-Sutton notes that “whether age verification for children will be a mandatory requirement is still the subject of ongoing consultation, but many service providers may voluntarily seek to implement verification at age 18 to avoid the more stringent child safety requirements.”

Age assurance technology will likely need to conform to existing government standards, including the UK Digital Identity and Attributes Trust Framework (DIATF). Introduced in 2021, DIATF sets the rules, standards, and governance for digital identity service providers such as Arissian, which developed Luciditi. One of the key principles behind DIATF is the need for mutual trust between users and online services, a principle it shares with the OSA.

Iain Corby, executive director for the Age Verification Providers Association, a politically neutral trade body representing all areas of the age assurance ecosystem, commented: “For too long, regulators have neglected enforcement of age restrictions online. We are now seeing their attention shift towards the internet, and those firms which offer goods and services where a minimum age applies, should urgently implement a robust age verification solution to avoid very heavy fines.”

4. How will the OSA be enforced?

Not without difficulty. The OSA will be enforced by Ofcom, the UK’s communications regulator. Ofcom will prepare and monitor a register of providers covered by the law, which may include up to 100,000 companies.

The government funded Ofcom in advance to ensure an immediate start. However, providers will soon have to finance the new measures themselves through regular fees to Ofcom.

Ofcom will not be pursuing individual breaches of the law. It will instead focus on assessing how a provider is meeting the new requirements overall. Providers that fall short risk being fined, as detailed above. Ofcom will have powers of entry and inspection at a provider’s offices.

In the most extreme cases, with the agreement of the courts, Ofcom will be able to require payment providers, advertisers and internet service providers to stop working with a site, preventing it from generating money or being accessed from the UK.

Criminal action will be taken against those who fail to follow information requests from Ofcom. Senior managers can be jailed for up to two years for destroying or altering information requested by Ofcom, or where a senior manager has “consented or connived in ignoring enforceable requirements, risking serious harm to children.”

The new law will come into effect in a phased approach:

Phase 1: illegal harms duties.

Codes of practice are expected to be published soon after the Act becomes law.

Phase 2: child safety duties and pornography.

Draft guidance on age assurance is due to be published from autumn 2023.

Phase 3: transparency and user empowerment.

This is likely to lead to further laws covering additional companies.

5. How should businesses be preparing for the OSA?

While the OSA mainly targets social media platforms and search engines, its measures are of general application. In other words, any business could face enforcement if its actions fall within the scope of the new law.

Businesses concerned about the OSA are advised to carry out a risk assessment covering products and services, complaints procedures, terms of service, and internal processes and policies. Companies should also assess how likely their platforms/products are to be accessed by children.

In particular, businesses will need to identify potential development work, as many obligations imposed by the OSA will require technical solutions and backend changes. Further advice from a legal perspective is available here.

Conclusions

The Wild West nature of the internet is notoriously difficult for any one country to tame. Things will be easier for the UK now that the EU’s Digital Services Act has come into effect, forcing more than 40 online giants including Facebook, X, Google, and TikTok to better regulate content delivered within the EU.

Nevertheless, the UK faces a lonely battle with leading providers, especially those concerned about a part of the Act aimed at identifying terrorism or child sexual exploitation and abuse (CSEA). Until very recently, it had been expected that Ofcom would be able to insist that a provider uses “accredited technology” to identify terrorism or CSEA content. In other words, a service like WhatsApp – that allows users to send encrypted messages – would have to develop the ability to breach the encryption and scan the messages for illegal content.

No surprise then that WhatsApp isn’t happy at what has been described as a ‘backdoor’ into end-to-end encryption measures. In April, the platform threatened to leave the UK altogether. Signal and five other messaging services expressed similar concerns. In response, the government has assured tech firms they won’t be forced to scan encrypted texts indiscriminately. Ofcom will only be able to intervene if and when scanning content for illegal material becomes “technically feasible.”

Ofcom will also be able to compel providers to reveal the algorithms used in selecting and displaying content so that it can assess how platforms prevent users from seeing harmful material.

These challenges notwithstanding, campaigners from all sides agree that something is needed even if some remain sceptical about the OSA. Modifications were made to the Act in June, in part guided by the inquest into the death of Molly Russell. In 2017, Molly died at the age of 14 from an act of self-harm after seeing online images that, according to the coroner, “shouldn’t have been available for a child to see.” The OSA may not be perfect. But for the sake of children across the country, it’s at least a step in the right direction.

Want to know more?

Luciditi’s age assurance technology can help meet some of the challenges presented by the OSA. If you would like to know more, contact us for a chat today.
